AI models are only as intelligent as the data they learn from. For organizations deploying AI systems across global markets, this fundamental truth takes on new urgency: when your model needs to serve customers in Tokyo, São Paulo, and Berlin with equal precision, the quality of your multilingual training data becomes make-or-break.

The numbers support this, too. Research shows that 76% of online shoppers prefer content in their native language, and 40% will never purchase from sites in other languages. Yet despite this clear market demand, Gartner revealed that 85% of AI projects fail primarily due to poor data quality. The gap between global ambition and execution often comes down to one critical factor: multilingual data validation.

As enterprises race to build AI systems that can understand context, respect cultural nuances, and maintain brand voice across dozens of languages, validation has evolved from a nice-to-have quality check into a strategic necessity. The question is no longer whether to validate multilingual data, but how to do it effectively enough to compete globally.

What Is Multilingual Data Validation?

Multilingual data validation is the systematic process of verifying and refining training data across languages to ensure it’s linguistically accurate, semantically correct, and culturally appropriate before it shapes your AI’s behavior. Think of it as quality control for the knowledge your AI will internalize.

This goes well beyond standard translation or data annotation. When translators convert text or annotators label sentiment, they’re creating raw material. Validation is what happens next: the rigorous review that catches what humans or automated processes miss.

The distinction matters because the stakes are different. A translator might render words correctly while missing that a phrase carries different connotations in the target culture. An annotator might label data consistently without realizing the examples reflect cultural biases. Validation catches these gaps.

Here’s where human expertise becomes irreplaceable. Automated validation tools can spot empty fields and formatting errors, but they can’t grasp whether a joke still works in another language or whether local idioms have been handled appropriately. This limitation becomes especially acute for low-resource languages, where automated systems lack sufficient data to make reliable judgments.

Research backs this up dramatically. Studies show human-in-the-loop validation is 8 times more effective than automated flagging alone in some domains, and in healthcare applications, it raised precision from 92% to 99.5%. The consensus among AI practitioners is that human expertise remains essential for achieving the linguistic and cultural accuracy that global AI demands.

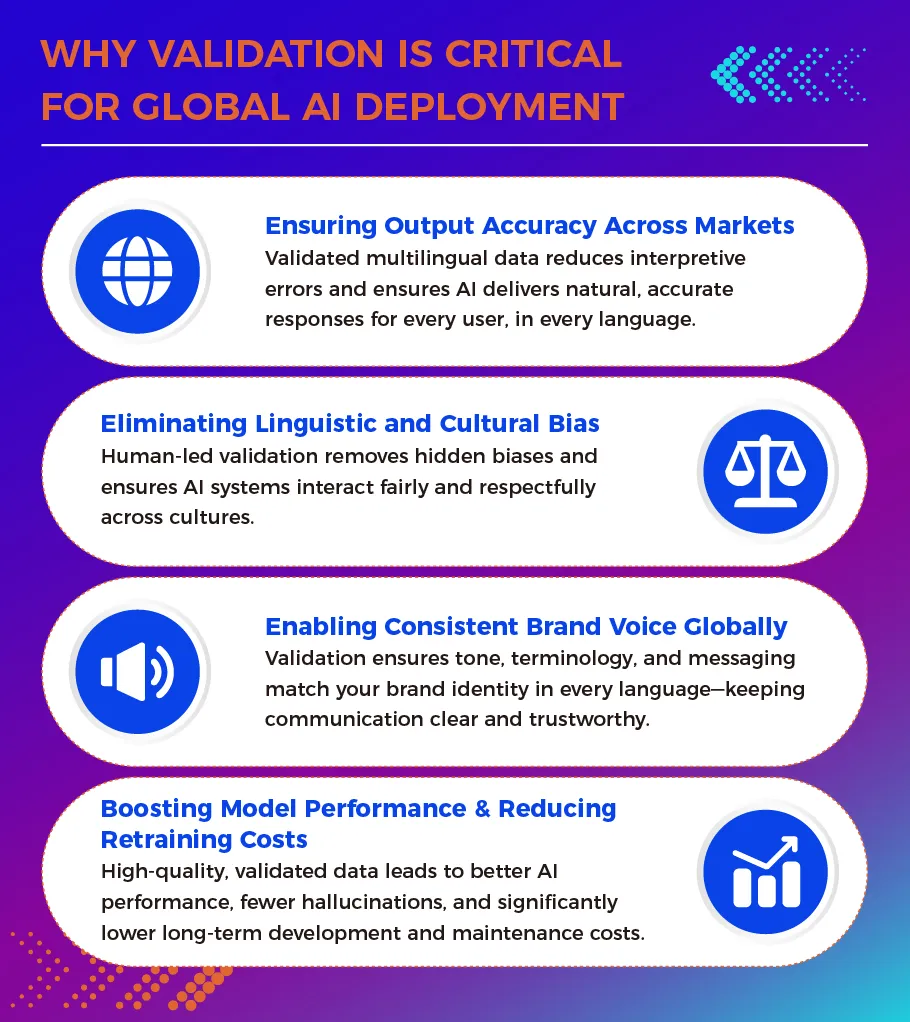

Why Validation Is Critical for Global AI Deployment

Ensuring Output Accuracy Across Markets

When AI trains on validated multilingual data, it develops a genuine “feel” for how language works in each market. Models gain broader examples of regional tone and nuance, leading to fewer interpretive errors. The practical result: a French user gets answers as accurate and natural as an English user would expect.

Without thorough validation, familiar problems emerge. Literal mistranslations confuse customers, like KFC’s infamous “Finger Lickin’ Good” becoming “Eat your fingers off” in Chinese, or Pepsi’s “Come Alive!” translating to “Pepsi brings your ancestors back from the grave.” Beyond embarrassing gaffes, unvalidated data leads to grammatical errors, untranslated terms, and outputs that feel awkward or irrelevant.

Quality validation also reduces AI hallucinations. When models learn from clean, verified examples, they’re less likely to fabricate information. Research confirms that high-quality multilingual training data produces lower hallucination risks and more reliable predictions in real customer environments.

Eliminating Linguistic and Cultural Bias

Bias enters datasets quietly but spreads quickly. When training sets over-represent English or Western contexts, AI naturally defaults to those norms. Models trained on uneven datasets absorb these patterns, potentially using Western idioms universally or misinterpreting questions from other dialects.

The consequences show up in real systems. Machine translators routinely map “doctor” to male pronouns and “nurse” to female ones, perpetuating gender stereotypes. Facial recognition systems trained mainly on lighter skin tones have famously failed on darker-skinned individuals. In conversational AI, lack of cultural context produces outputs that can insult or stereotype users from certain regions.

This is where validation proves its value. Human reviewers from diverse backgrounds spot biases that automated systems miss. They audit data for balanced, respectful representation across cultures. They flag stereotypes and cultural faux pas before they become learned behaviors. Iterative quality assurance catches issues that automated filters overlook, creating a feedback loop that keeps models accurate as new content arrives.

Enabling Consistent Brand Voice Globally

Global enterprises understand that brand voice drives customer trust. Multilingual validation makes global consistency possible by enforcing tone, terminology, and style guides across every language. Whether your brand voice is formal or friendly, technical or conversational, validation ensures localized content reflects it faithfully.

The alternative is risky. Inconsistent messaging confuses customers and dilutes brand identity. A casual, relatable message in English might accidentally become stiff or aggressive in another language. Worse, a harmless phrase in one culture could offend in another. A single misstep can damage trust with an entire demographic, and in the age of social media, such damage spreads fast and lasts long.

Leading global companies address this through structured processes: centralized style guides, approved glossaries, and validation workflows that check every output against these standards. The result is unified messaging that stays recognizable and culturally relevant across all markets.

Boosting Model Performance and Reducing Retraining Costs

Catching data problems early saves enormous effort later. When multilingual data is validated before training, errors get fixed when they’re cheap to address—not after deployment when they’re exponentially more expensive.

The financial case is stark. Gartner estimates that poor data quality costs organizations an average of $12.9 million annually, with a staggering $3.1 trillion impact on the U.S. economy alone, according to IBM.

Conversely, clean data improves long-term performance measurably. Research demonstrates that when datasets are balanced across languages and carefully validated, model accuracy climbs significantly. Models trained with diverse language coverage reason more consistently and avoid the “digital divide” in accuracy that plagues systems built on narrow datasets.

What Effective Multilingual Validation Looks Like

Native-Level Linguistic and Subject Matter Expertise

The foundation of quality validation is native-language fluency. Only native speakers reliably detect subtle issues, like the idioms, humor, double meanings, and registers that non-natives miss. Native-speaker validators understand when an expression feels awkward or when a translation has missed an implied tone, helping conversational AI sound natural rather than stilted.

For technical or regulated domains, language skill alone isn’t sufficient. Medical translators need anatomical knowledge; legal linguists must understand statutory language precisely. Subject-matter experts ensure validation catches not just language errors but conceptual ones, preventing dangerous mistakes in critical fields.

Custom Quality Frameworks for Each Language

Effective validation recognizes that languages differ fundamentally. Each has unique grammar, writing systems, and cultural context that demand tailored criteria. What constitutes an error in Japanese honorific usage doesn’t apply to Spanish. A Chinese product name might carry unintended meanings in its characters that English-style QA would never catch.

Strong frameworks typically include style guides, glossaries of approved terms, common error categories, and cultural notes. During validation, reviewers use these as checklists, ensuring each language is judged by its own standards rather than an English-centric yardstick.

Rigorous Multi-Stage Validation Workflows

Robust validation involves multiple complementary stages: automated checks for technical issues, linguistic reviews for correctness and consistency, semantic checks to verify meaning, cultural reviews for appropriateness, terminology audits against brand glossaries, and functional testing for real-world usage.

These stages often loop back when issues emerge. The key is that each stage addresses what the others can’t, creating comprehensive coverage that catches errors before they shape model behavior.

Transparent Feedback Loops for Model Improvement

Validation doesn’t end at approval. Its findings feed back into the training cycle. When reviewers identify errors or ambiguities, those insights inform the next iteration of data refinement and model retraining. Teams track metrics like error rates per language, watching them decline with each validation cycle. This continuous improvement loop ensures AI systems evolve rather than stagnate.

Conclusion

The evidence is clear: multilingual data quality forms the foundation of global AI performance. Models trained on balanced, well-validated multilingual datasets consistently outperform those that aren’t. Academic research from Stanford and MIT confirms that diverse language coverage and cultural grounding are essential for accurate, fair AI across geographies.

For enterprises expanding globally, multilingual validation is how you ensure trust and consistency in every market. It’s how you protect your brand, comply with local norms, and extract maximum value from AI investments. As AI becomes truly global, validation transforms from a quality checkpoint into a strategic enabler.

The question for you is straightforward: Will your AI inspire confidence worldwide, or will it stumble over preventable errors in markets you can’t afford to lose?

Ready to ensure your AI succeeds globally? Contact Clearly Local to learn how our multilingual data validation services help enterprises deploy AI systems that perform reliably across languages, cultures, and markets.

Contact Us | Sign up for our Webinar on “Driving High-Performing AI with High-Quality Multilingual Data”