AI content moderation is everywhere, from social media platforms to e-commerce reviews, but it’s built on a shaky foundation. Around 90% of AI training data is in English, while the internet itself is a multilingual, multicultural space. This mismatch creates blind spots with serious consequences: misflagged posts, unchecked hate speech, and even legal risk in regions where language and culture shape meaning in ways AI can’t yet grasp.

As platforms scale globally, the need for smarter, safer, and more inclusive AI moderation solutions becomes critical. In this post, we’ll explore how multilingual data training can help bridge the gap, reduce bias, and build AI systems that truly understand the communities they serve.

The Foundations of AI in Content Moderation

What We’re Really Dealing With

Content moderation encompasses the massive task of reviewing and managing user-generated content across all formats: text posts, images, videos, audio clips, and everything in between. It involves identifying content that violates platform policies or legal standards, from obvious spam and hate speech to more nuanced violations that require cultural context to understand.

The scale of this challenge is staggering. With billions of pieces of content uploaded daily, manual moderation alone simply isn’t viable. Imagine trying to hire enough human moderators to review every TikTok video, every Instagram story, and every Twitter reply in real time. It’s logistically impossible and economically unfeasible.

AI’s Strategic Advantage

This is where AI becomes not just helpful but essential. AI-powered content moderation systems offer three critical advantages: speed, scalability, and risk reduction. They can process content far faster than any human team and, by detecting problematic material at scale, significantly reduce compliance and brand risk.

Consider this statistic: AI-powered digital asset management solutions can accelerate go-to-market timelines by 37%, allowing teams to publish content in real time with confidence that questionable material will be flagged or rejected. That is both an efficiency gain and a competitive advantage.

The Moderation Spectrum

Platforms employ several distinct moderation approaches, each with different levels of AI and human involvement. Here’s a brief look at their functions:

- Pre-moderation: Reviews content before it goes live, stopping harmful material in its tracks but potentially slowing down user experience.

- Post-moderation: Allows immediate publishing but reviews content afterward, removing violations after the fact.

- Distributed moderation: Leverages community voting for content visibility, with a smaller central team of employees (admins) for high-level policy enforcement. Platforms like Reddit have had success with this approach.

- Hybrid moderation: Combines the speed and scalability of automated tools with the contextual understanding and nuanced judgment of human reviewers.

The hybrid model represents the sweet spot: AI handles high-volume, routine filtering and escalates complex or ambiguous cases to human moderators. Organizations get near-instant detection, paired with human judgment where it matters most.
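To make the hybrid idea concrete, here is a minimal Python sketch of how a platform might route content based on a classifier's confidence. Everything in it is an illustrative assumption: the threshold values, the `route` function, the `Decision` type, and the `language_supported` flag are hypothetical and not taken from any specific platform or vendor API.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned per policy and per language.
AUTO_REMOVE_THRESHOLD = 0.95
AUTO_APPROVE_THRESHOLD = 0.05


@dataclass
class Decision:
    action: str  # "remove", "approve", or "human_review"
    reason: str


def route(violation_score: float, language_supported: bool = True) -> Decision:
    """Route one item given a classifier's violation probability (0.0 to 1.0)."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    # Assumption: content in a language the model was never validated on is
    # not auto-actioned, because its scores are less trustworthy.
    if not language_supported:
        return Decision("human_review", "language not validated for this model")
    if violation_score >= AUTO_REMOVE_THRESHOLD:
        return Decision("remove", "high-confidence violation")
    if violation_score <= AUTO_APPROVE_THRESHOLD:
        return Decision("approve", "high-confidence benign")
    # Everything in the uncertain middle band goes to a human moderator.
    return Decision("human_review", "model uncertain")
```

The design choice worth noting is the middle band: the wider the gap between the two thresholds, the more content reaches human reviewers, which trades cost for accuracy.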

The Multilingual Imperative

Language Is More Than Words

Here’s where most content moderation systems fail spectacularly: they’re up to 30% less accurate at moderating non-English content, because their training datasets are overwhelmingly biased toward English. This lack of multilingual fluency has led companies like Meta to run non-English content through basic machine translation before flagging it, an approach that hasn’t gone well for them.

Such approaches stem from a basic and costly misunderstanding about the nature of language. Human intent can’t be captured just by converting words from one vocabulary to another. Capturing it requires a deeper understanding of context, slang, idioms, and constantly evolving cultural references that are deeply embedded in local communities.

Take this real-world example: in parts of Canada, the word “Pepsi” is used as a slur against Indigenous communities, while having no such meaning elsewhere. An AI system trained primarily on standard French or English would likely miss this pejorative use entirely.

These subtle but critical shifts in meaning are nearly impossible to detect without deep regional and cultural familiarity.
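An accuracy gap between languages only becomes visible when evaluation results are broken out per language instead of being averaged together. The Python sketch below shows one simple way to do that. The record format, the function names, and the choice of English (`"en"`) as the reference language are assumptions made for illustration, and the sample data is invented.

```python
from collections import defaultdict


def accuracy_by_language(records):
    """records: iterable of (language, predicted_label, true_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for language, predicted, actual in records:
        total[language] += 1
        if predicted == actual:
            correct[language] += 1
    return {lang: correct[lang] / total[lang] for lang in total}


def gap_to_reference(accuracies, reference="en"):
    """Accuracy shortfall of each language relative to the reference language."""
    base = accuracies[reference]
    return {lang: base - acc for lang, acc in accuracies.items() if lang != reference}


# Invented evaluation records, for illustration only.
sample = [
    ("en", "hate", "hate"),
    ("en", "ok", "ok"),
    ("am", "ok", "hate"),  # a missed violation in Amharic
    ("am", "hate", "hate"),
]
per_language = accuracy_by_language(sample)
print(gap_to_reference(per_language))
```

A positive gap means the model performs worse in that language than in the reference language, which is a signal that more annotated data or more human review is needed there.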

Hidden Security Risks

Recent research from Johns Hopkins reveals that large language models are significantly more susceptible to jailbreaking attacks in low-resource languages like Armenian and Maori than in widely used languages like English and Spanish. This creates a dangerous paradox: not only is a platform’s ability to proactively moderate in these languages degraded, but its defenses are also weaker against deliberate, coordinated attacks. The communities that need the most protection often receive the least, which is why responsible AI moderation cannot stop at the largest languages.

Regulatory Pressures and Compliance

Navigating Global Legal Frameworks

Content moderation operates within a complex web of global regulations. The United States, with its First Amendment protections, maintains a relatively permissive approach, while countries like Iran enforce strict state-controlled censorship. Most jurisdictions, including the European Union, fall somewhere between these extremes, creating a fragmented legal landscape that platforms must carefully navigate.

The DSA Game-Changer

The EU’s Digital Services Act represents a paradigm shift in content moderation regulation. This transformative legislation imposes significant obligations on Very Large Online Platforms, including mandatory annual systemic risk assessments and transparent reporting requirements. Crucially, the DSA explicitly requires platforms to disclose the “qualifications and linguistic expertise” of their content moderation teams, a direct regulatory response to documented multilingual moderation failures.

The DSA’s influence extends far beyond Europe’s borders. To streamline compliance and reduce administrative burden, many platforms choose to apply EU standards globally, effectively exporting European regulatory values worldwide and creating a de facto global regulatory floor for content moderation.

Real-World Compliance Failures

The stakes of getting this wrong are enormous. Internal documents from Meta have highlighted an obvious lack of investment in content moderation resources for major non-US markets, leaving communities vulnerable to hate speech and disinformation. The company’s reliance on automated translation to convert non-English posts has resulted in inaccurate decisions with real-world legal consequences in conflict regions like Ethiopia and Myanmar, where hate speech in languages like Amharic has proliferated unchecked.

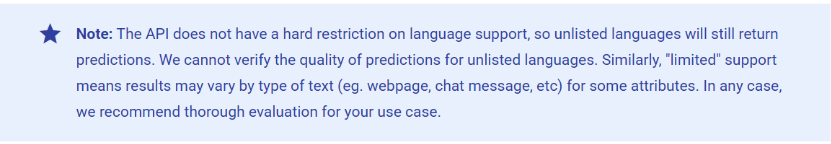

Interestingly, technology providers like Google acknowledge these limitations. Google’s moderateText API candidly states that while it works for “unlisted languages,” it cannot “verify the quality of predictions” for them, a frank admission of underinvestment in multilingual data training.

Strategic Roadmap for the Future

Best Practices for Implementation

To navigate these complex challenges, platforms must commit to proactive, sustained investment in collecting and rigorously annotating high-quality multilingual datasets, with particular focus on low-resource languages. This requires detailed annotation manuals, consistent inter-annotator agreement standards, and ongoing commitment rather than one-time projects.
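One common way to put a number on inter-annotator agreement is Cohen's kappa, which corrects the raw agreement rate between two annotators for the agreement expected by chance. The Python sketch below is a plain implementation of that standard formula, offered as one possible metric and not as a prescribed standard. The function name and the sample labels are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: from each annotator's own label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


# Invented labels from two hypothetical annotators on the same four posts.
annotator_1 = ["hate", "ok", "ok", "hate"]
annotator_2 = ["hate", "ok", "hate", "hate"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Tracking this score per language makes it easier to spot where annotation guidelines are ambiguous or where annotators lack the regional context the task demands.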

Policy Recommendations

Global platforms should view the DSA not as a regional burden but as a blueprint for establishing worldwide best practices. This includes proactively providing linguistic expertise data for all major markets, not just those where the EU requires it.

Platforms must also fundamentally re-evaluate their content moderation labor supply chains, committing to ethical practices that ensure fair wages, robust psychological support, and career advancement opportunities for data labelers and human moderators. This shift from exploitation to partnership is essential for building truly sustainable and ethical moderation ecosystems.

Conclusion: Building a Truly Global Solution

The path forward is clear but challenging. Multilingual data training is a fundamental requirement for safe, smart, and localized content moderation. As our digital world becomes increasingly interconnected, the platforms that serve global communities have a responsibility to invest in systems that understand and respect the full spectrum of human expression across cultures and languages.

Is your platform ready for the next generation of content moderation? Don’t let language bias and cultural blind spots put your users—or your brand—at risk. Clearly Local specializes in multilingual AI training data services and ethical moderation solutions tailored to your global audience.

Contact us today to build smarter, safer, and truly inclusive moderation systems.