You paid for premium human translation services, but the final deliverable feels off. What you got might be unauthorized AI: text translated by a machine in seconds, with a quick polish to hide the evidence.

This isn’t a hypothetical scenario. As generative AI capabilities have exploded over the past few years, some language service providers (LSPs) have discovered a lucrative shortcut: billing clients at full human translation rates while secretly substituting machine translation (MT) or minimally edited AI output. The profit margins are irresistible, potentially reaching 95% when raw MT costs pennies compared to the premium rates commanded by skilled human translators.

For buyers of translation services, this deception isn’t just about getting less than what you paid for. It’s about inheriting profound risks that were supposed to stay with your vendor, including regulatory non-compliance, legal liability from mistranslations, confidentiality breaches when your documents are uploaded to public AI tools, and brand damage from cultural missteps or factual errors.

The good news? You don’t have to rely on blind trust. This article will equip you with practical strategies to detect AI output in your deliverables, audit vendor workflows for proof of human involvement, and enforce accountability through contracts and verification protocols. Because in today’s AI-saturated landscape, the only vendors worth your investment are those whose practices actually match their promises.

Why This Matters

Let’s be clear about what’s at stake when an LSP swaps human translation for undisclosed AI.

Compliance and Legal Risks

When you’re translating legal contracts, regulatory filings, patent applications, or pharmaceutical documentation, precision is non-negotiable. Machine translation systems struggle catastrophically with jurisdiction-specific legal terminology, the contextual intent of clauses, and regulatory nuances. Research has found critical errors, including complete omissions of legal obligations, in 38% of MT-translated legal texts. Court systems have explicitly stated that machine translation cannot be relied upon for complex legal concepts that impact people’s rights.

But here’s what keeps compliance officers up at night: if your LSP secretly uploads your confidential documents to ChatGPT or Google Translate to “assist” with translation, it has very likely breached your NDA. Public tools of this kind may log interactions and, depending on their terms and settings, use submitted data for training purposes. That pharmaceutical trial data or merger documentation? It’s now potentially accessible outside your governance framework, exposing you to regulatory penalties and IP violations.

Quality Risks

Even when AI output reads smoothly, it can be dangerously wrong. Large language models (LLMs) are statistical prediction engines designed to maximize fluency, not accuracy. This creates what researchers call “hallucinations”: factually inaccurate content that sounds perfectly plausible.

According to Alibaba’s recent evaluations, top-tier multilingual models were found to produce hallucinatory content in up to 60% of their responses. These failures include “source detachment” errors where the AI adds information that wasn’t in the original or omits critical content. For financial reports, medical instructions, or technical specifications, such quality issues are liability time bombs.

Cultural and tonal failures present another dimension of risk. AI cannot render the creative tone, persuasive impact, or cultural sensitivity that brand-critical content requires. Your carefully crafted marketing message might emerge technically accurate but culturally tone-deaf, undermining years of brand building in a target market.

Financial Risks

The economics are straightforward: Full Human Translation (FHT) typically costs around $0.08 per word. Transparent, certified MT Post-Editing (MTPE) runs at 65-75% of that rate, or roughly $0.05 to $0.06 per word. But raw MT output costs almost nothing.

When an LSP charges you the premium FHT rate but delivers lightly edited MT, they’re pocketing the massive difference while transferring all the risk to you. You’re paying for human expertise (contextual understanding, cultural nuance, and mastery of technical terminology) and getting statistical word prediction instead.
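To make the arithmetic concrete, here is a minimal Python sketch. The $0.08 FHT rate and the 65-75% MTPE range come from the figures above; the raw MT cost of $0.004 per word and the 50,000-word project size are illustrative assumptions, not quoted prices.

```python
FHT_RATE = 0.08              # USD per word, the FHT rate cited above
MTPE_SHARE = (0.65, 0.75)    # MTPE priced as a fraction of the FHT rate
ASSUMED_RAW_MT_COST = 0.004  # USD per word; hypothetical, for illustration only


def gross_margin(billed_rate: float, actual_cost: float) -> float:
    """Share of the billed rate the vendor keeps after its own cost."""
    return (billed_rate - actual_cost) / billed_rate


def overcharge(words: int, billed_rate: float, fair_rate: float) -> float:
    """Amount paid above what the delivered service should have cost."""
    return words * (billed_rate - fair_rate)


words = 50_000  # hypothetical project size
margin = gross_margin(FHT_RATE, ASSUMED_RAW_MT_COST)
mtpe_low, mtpe_high = (FHT_RATE * share for share in MTPE_SHARE)

print(f"Invoice at FHT rate:        ${words * FHT_RATE:,.2f}")
print(f"Vendor margin on raw MT:    {margin:.0%}")
print(f"Fair price as MTPE:         ${words * mtpe_low:,.2f} to ${words * mtpe_high:,.2f}")
print(f"Overcharge vs. top MTPE:    ${overcharge(words, FHT_RATE, mtpe_high):,.2f}")
```

Under these assumptions the vendor keeps about 95% of the invoice, and the buyer pays at least a quarter more than a transparent MTPE engagement would have cost.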

How to Spot AI Output in Translation Deliverables

Sophisticated AI output is notoriously difficult for humans to detect, and even trained professionals struggle to identify it reliably. But AI leaves fingerprints; you just need to know where to look.

Linguistic Red Flags

Repetitive Structures and Mechanical Flow

Human translators naturally vary their sentence structures and transitions. AI systems, trained on massive datasets, tend toward statistically common patterns. Watch for:

- Overly balanced clause structures

- Repetitive openers like “In conclusion,” “It is important to note,” or “In today’s world”

- Excessive use of punctuation marks like the em dash (an issue recently addressed by OpenAI)

- Unnecessary repetition of similar phrasing within short passages
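A first-pass screen for two of these patterns can be automated. The sketch below, in Python, counts sentences that begin with the stock openers listed above and measures em dash density. It assumes an English target text; the phrase list is just the three examples from this article, and no threshold is implied, so the counts are only meaningful when compared against known-human samples of similar length.

```python
import re
from collections import Counter

# The three example openers from the list above; extend per language and domain.
STOCK_OPENERS = ("in conclusion", "it is important to note", "in today's world")


def opener_counts(text: str, openers=STOCK_OPENERS):
    """Return (Counter of stock openers, total sentence count)."""
    # Normalize curly apostrophes so both apostrophe styles match.
    normalized = text.replace("\u2019", "'").lower()
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", normalized) if s.strip()]
    counts = Counter()
    for sentence in sentences:
        for opener in openers:
            if sentence.startswith(opener):
                counts[opener] += 1
    return counts, len(sentences)


def em_dashes_per_1000_words(text: str) -> float:
    """Em dash (U+2014) occurrences per 1,000 whitespace-separated tokens."""
    words = len(text.split())
    return 1000 * text.count("\u2014") / words if words else 0.0


sample = (
    "It is important to note that prices vary. In conclusion, check the terms. "
    "It is important to note that delays occur. Contact support."
)
counts, total = opener_counts(sample)
print(dict(counts), "out of", total, "sentences")
```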

Literal Translations and Awkward Phrasing

Machine translation excels at word-for-word conversion but struggles with contextual meaning. Red flags include:

- Idioms translated literally rather than adapted to equivalent expressions in the target language

- Preposition errors that suggest statistical guessing rather than grammatical understanding (e.g., “responsible of” instead of “responsible for”)

- Phrasing that’s technically grammatical but sounds unnatural to native speakers

- Inconsistencies in terminology across the document

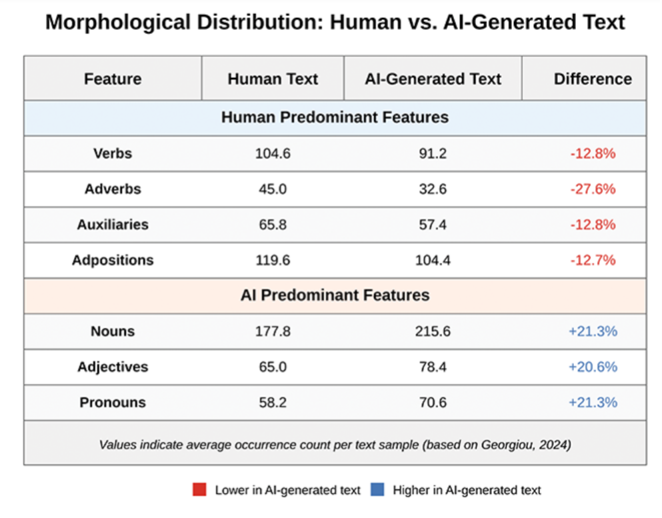

Low Lexical Diversity

This is one of the most reliable statistical indicators. AI-generated specialized content typically shows:

- Significantly less vocabulary creativity compared to human writing

- Overuse of the most common domain-specific terms rather than synonyms or varied expressions

- A low ratio of content words to function words

- Formulaic use of expressions that appear frequently in training data
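Two of these indicators are easy to compute. The following Python sketch calculates a type-token ratio (distinct words divided by total words) and a content-to-function word ratio. The function-word list is a small illustrative English set, not a standard lexicon, and the tokenizer is deliberately simple; treat both as assumptions to replace with resources for your target language.

```python
import re

# Small illustrative set of English function words; not a complete lexicon.
FUNCTION_WORDS = frozenset(
    "a an the and or but of to in on at by for with from as is are was were "
    "be been it this that these those not".split()
)


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; curly apostrophes are normalized first."""
    return re.findall(r"[a-z']+", text.replace("\u2019", "'").lower())


def type_token_ratio(tokens: list[str]) -> float:
    """Distinct tokens divided by total tokens (0.0 for empty input)."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def content_function_ratio(tokens: list[str]) -> float:
    """Content words divided by function words."""
    function = sum(token in FUNCTION_WORDS for token in tokens)
    content = len(tokens) - function
    return content / function if function else float("inf")


tokens = tokenize("The cat and the dog")
print(type_token_ratio(tokens), content_function_ratio(tokens))
```

Type-token ratio falls as texts get longer, so compare samples of equal length, ideally a suspect deliverable against earlier work known to be human-translated in the same domain.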

Technical Detection

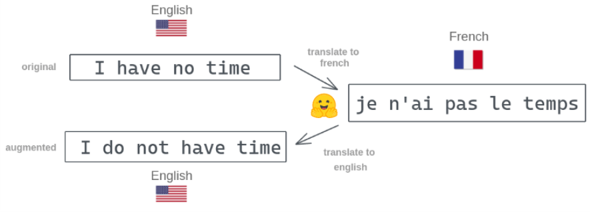

Back-Translation Protocols

This is your most powerful forensic tool. Back-translation involves taking the delivered translation and running it back through an independent MT engine to the source language, then comparing the result to your original text using Natural Language Processing (NLP) analysis.

Why it works: Machine translation introduces unique statistical signatures into text. When that text is reverse-translated, measurable deviations from the original can indicate machine origin, even after human post-editing. Research demonstrates that automated back-translation can differentiate between human and MT-assisted texts with approximately 75% accuracy.

That accuracy is not proof on its own, but it is enough to make back-translation (BT) a potent deterrent. When vendors know you’re running BT protocols, the risk of getting caught makes the fraud far less attractive than the reward.
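The comparison step of such a protocol can be sketched in a few lines of Python. This is a simplified illustration, not the method used in the research cited above: `back_translate` is a hypothetical callable standing in for whatever independent MT engine you use, and the token-level similarity from the standard library’s `difflib` is a stand-in for fuller NLP analysis. Scores only become meaningful when compared against a baseline built from translations you know to be human.

```python
from difflib import SequenceMatcher
from typing import Callable, Iterable


def similarity(a: str, b: str) -> float:
    """Token-level similarity in [0, 1] between two texts."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def back_translation_scores(
    pairs: Iterable[tuple[str, str]],
    back_translate: Callable[[str], str],
) -> list[float]:
    """For each (source, delivered translation) pair, back-translate the
    delivery and score how closely it matches the original source."""
    return [similarity(source, back_translate(target)) for source, target in pairs]


# Stub engine for demonstration only; replace with a real, independent MT call.
def fake_engine(text: str) -> str:
    return {"Der Vertrag endet 2025.": "The contract ends 2025."}.get(text, "")


pairs = [("The contract ends in 2025.", "Der Vertrag endet 2025.")]
scores = back_translation_scores(pairs, fake_engine)
print(scores)
```

Keeping the MT engine behind a plain callable means the same scoring code works with any provider, and the engine used for checking can be kept independent of whatever engine a vendor might have used.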

AI Detection Tools

Several NLP-based tools are now available that analyze linguistic patterns to identify AI authorship. While not perfect, they provide quantifiable probability scores that can supplement your evidence when questioning a vendor’s methodology.

Auditing Vendor Workflows

Here’s the fundamental principle: Don’t just evaluate the final deliverable; audit the process that created it.

Why Process Audits Matter

A skilled LSP can lightly post-edit MT output to eliminate obvious errors, making the result difficult to distinguish from human work through quality review alone. But the digital workflow doesn’t lie. Translation Management Systems (TMS) create audit trails that reveal exactly how the work was performed.

Tools and Methods

TMS Audit Logs

Modern translation platforms track everything:

- Version history showing who made changes and when

- Timestamps revealing work pace

- Edit distance metrics showing how much text was changed versus left unchanged

- Approval workflows and reviewer activity

Contractually require full access to these logs for projects billed at FHT rates. The transparency requirement alone will deter fraudulent vendors.
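Once you have the logs, the edit metrics are straightforward to summarize. The Python sketch below assumes a hypothetical export in which each segment is a pair of strings: the text that first appeared in the target field and the text that was finally delivered. Real TMS exports differ by platform. It uses `difflib`’s similarity ratio as a simple stand-in for a true edit-distance metric.

```python
from difflib import SequenceMatcher


def change_ratio(before: str, after: str) -> float:
    """0.0 means the segment is unchanged; 1.0 means nothing in common."""
    return 1.0 - SequenceMatcher(None, before, after).ratio()


def summarize_edits(segments: list[tuple[str, str]], min_change: float = 0.0) -> dict:
    """Summarize how much of a job was actually edited.

    segments: (initial target text, final target text) per segment,
    a hypothetical export format.
    min_change: a segment counts as edited if its change ratio exceeds this.
    """
    if not segments:
        return {"segments": 0, "edited_share": 0.0, "mean_change": 0.0}
    ratios = [change_ratio(before, after) for before, after in segments]
    edited = sum(ratio > min_change for ratio in ratios)
    return {
        "segments": len(ratios),
        "edited_share": edited / len(ratios),
        "mean_change": sum(ratios) / len(ratios),
    }


log = [
    ("Terms of service", "Terms of service"),    # left untouched
    ("Terms of servise", "Terms of service"),    # light correction
    ("", "This agreement is governed by law."),  # typed from scratch
]
print(summarize_edits(log))
```

A job translated from scratch should show most segments starting empty and ending full; a job where nearly every segment starts populated and barely changes points to pre-filled machine output.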

Language Quality Estimation (LQE) Scores

Advanced TMS platforms include LQE agents that predict translation quality and highlight segments needing attention. For human translation projects, you expect to see:

- Moderate initial quality scores (human translators work from scratch)

- Substantial editing activity throughout the document

- Consistent effort across sections

Suspiciously high initial LQE scores suggesting minimal required editing? That’s a red flag that the “translator” started with machine-generated text.

Red Flags in Workflow

Unnaturally Fast Turnaround

Professional human translators typically handle 2,000-2,500 words per day for quality work requiring research and terminology management. If your vendor delivered 10,000 words overnight, something’s wrong.
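The check is simple enough to automate against delivery records. This Python sketch uses the 2,500 words-per-day upper bound from the figure above; the `translators` parameter and the use of calendar time rather than working hours are simplifying assumptions, and the dates are made up for the example.

```python
from datetime import datetime

MAX_WORDS_PER_DAY = 2_500  # upper end of the daily range cited above


def implied_daily_output(
    word_count: int, assigned: datetime, delivered: datetime, translators: int = 1
) -> float:
    """Words per translator per calendar day implied by the turnaround."""
    elapsed_days = (delivered - assigned).total_seconds() / 86_400
    if elapsed_days <= 0 or translators < 1:
        raise ValueError("delivery must follow assignment; translators must be >= 1")
    return word_count / (elapsed_days * translators)


def is_suspicious(
    word_count: int, assigned: datetime, delivered: datetime, translators: int = 1
) -> bool:
    """True when the implied pace exceeds the assumed human ceiling."""
    return implied_daily_output(word_count, assigned, delivered, translators) > MAX_WORDS_PER_DAY


# 10,000 words handed over at 17:00 and returned at 09:00 the next morning.
assigned = datetime(2024, 3, 4, 17, 0)
delivered = datetime(2024, 3, 5, 9, 0)
print(round(implied_daily_output(10_000, assigned, delivered)))
print(is_suspicious(10_000, assigned, delivered))
```

A flag here is a prompt for questions, not a verdict: a vendor may legitimately split a job across several linguists, which is exactly what the audit logs should then show.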

Minimal Editing Patterns

If audit logs show a translator “worked” for eight hours but made only minor edits affecting 5% of segments, they likely started with MT output rather than translating from scratch.

Missing Review Steps

ISO 17100, the international standard for translation services, requires specific quality control steps. If contracted reviews or quality checks are mysteriously absent from the workflow logs, investigate.

Strategic Recommendations for Buyers

- Update your contracts immediately. Don’t wait for the next RFP (Request for Proposal) cycle. Add AI governance clauses, audit rights, and breach penalties to existing vendor agreements through amendments.

- Build technical verification into your quality assurance workflow. Back-translation protocols and linguistic forensics should be standard operating procedure, not exceptional measures. The investment in detection tools is minimal compared to the risks of undetected fraud.

- Shift from trust-based to verification-based vendor relationships. Though you shouldn’t assume bad intent, it’s important to recognize that the financial incentives for cutting corners are enormous, and your fiduciary responsibility is to verify compliance.

- Distinguish between appropriate and inappropriate AI use. Transparent, certified MTPE has legitimate applications for high-volume, lower-stakes content. What’s unacceptable is deception: billing for human work while delivering machine output, especially for regulated or confidential content.

It’s also important to note that when large localization providers quietly replace human work with undisclosed AI output, the damage doesn’t just fall on clients; it also falls on the linguists whose livelihoods have already been squeezed by rapid automation. Any remaining opportunities for professional translators to earn a stable living shouldn’t be undermined by opaque corporate practices designed to boost margins at their expense.

Conclusion

While the vast majority of providers in the language services industry are trustworthy, the same AI capabilities that enable tremendous productivity gains have also created opportunities for a few bad apples to profit through deception.