Remember the days when waiting for your favorite foreign-language film or show to be dubbed or subtitled felt like an eternity? For global studios, that delay was a major bottleneck. The traditional localization process, while effective, often couldn’t keep pace with the industry’s insatiable demand for global content. The need for faster, more scalable solutions has never been greater.

But a revolution is underway. AI-powered multimedia localization tools are dismantling these old barriers and reshaping how entertainment reaches a worldwide audience. By harnessing neural machine translation (NMT), natural language processing (NLP), and generative AI, these tools enable faster, more scalable adaptations with deeper cultural resonance: they go beyond translating words to adapting voices, visuals, and emotional tone. As a result, global studios can now launch content simultaneously across continents, connecting with viewers on an unprecedented level.

What Are AI-Powered Multimedia Localization Tools?

Beyond Word-for-Word Translation

AI supercharges the complex process of multimedia localization. Think of it as the evolution from a basic calculator to a sophisticated computer.

Modern AI tools, built on neural networks trained on massive datasets of film, TV, and literature, understand context, nuance, and genre-specific styles. The advent of Generative AI (GenAI) takes this further, allowing systems to generate nuanced, interactive translations and even synthetic media. This means an AI can now grasp the difference in tone between a horror movie and a romantic comedy, ensuring the localized version carries the same emotional weight.

Core Capabilities: The AI Localization Toolkit

The toolkit of these AI platforms is impressively comprehensive:

- Automated Subtitle Generation: AI can now automatically transcribe dialogue, generate subtitles, and even identify and label different speakers, all within an online editor for quick human refinement.

- AI Dubbing with Lip-Syncing: This is one of the most striking advances. Synthetic voice technology can create natural-sounding dubbing that preserves the original actor’s tone and cadence. Even more impressive, some tools can match the generated speech to the actor’s lip movements for a seamless viewing experience.

- Visual and Cultural Adaptation: AI can analyze and dynamically adapt visual elements like colors and imagery for cultural relevance. For instance, it can suggest changing a color symbolizing mourning in one culture to an appropriate alternative for another, ensuring the visual storytelling resonates correctly.

- Real-Time Translation and Personalization: The future is leaning toward real-time adaptation, where content could be personalized on the fly based on user preferences, from adjusting a show’s thumbnail to tailoring its synopsis for different regional audiences.
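To make the automated subtitle generation item above more concrete, here is a minimal sketch of the final step: turning speaker-labeled transcript segments into a SubRip (.srt) subtitle file. The `Segment` structure and the sample dialogue are hypothetical and stand in for whatever a transcription and speaker-identification step would produce; this is an illustration under those assumptions, not the output format of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcribed utterance (hypothetical structure for illustration)."""
    start: float   # seconds from the start of the video
    end: float     # seconds from the start of the video
    speaker: str   # label assigned by a speaker-identification step
    text: str      # transcribed or translated dialogue


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[Segment]) -> str:
    """Render segments as SubRip text, prefixing each cue with its speaker."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}\n"
            f"[{seg.speaker}] {seg.text}\n"
        )
    # Joining with a newline leaves one blank line between cues.
    return "\n".join(blocks)


if __name__ == "__main__":
    demo = [
        Segment(0.0, 2.5, "Speaker 1", "Where were you last night?"),
        Segment(2.5, 4.75, "Speaker 2", "Working late. Again."),
    ]
    print(to_srt(demo))
```

In a real workflow, the resulting file would then be opened in an editor so a human can correct timing, wording, and speaker labels.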

Strategic Advantages for Global Studios

Speed and Scale: Launching Globally, Overnight

The most immediate impact of AI is on speed. Traditional localization could delay a global rollout by weeks or months. AI tools can slash this time-to-market by up to 80%, processing content in minutes rather than days.

This speed unlocks a powerful new strategy: the simultaneous multi-language release. Instead of a staggered, sequential launch, studios can now debut a show like Squid Game across dozens of countries on the same day. This enables a unified global marketing campaign and a synchronized, international fan community, which can greatly boost a show’s virality and cultural impact. The scale is significant, too: AI-first platforms can deliver content in over 300 languages without a proportional increase in localization staff.

Cost Efficiency: Doing More with Less

Alongside speed comes significant cost reduction. By automating time-intensive tasks like initial transcription and translation, AI can reduce localization costs by up to 60%. This isn’t about replacing people, but about reallocating resources. The savings allow studios to invest more in the creative and strategic aspects of localization, such as high-level cultural consulting and quality control.

Enhancing Viewer Experience Through AI Localization

Personalization and Cultural Adaptation

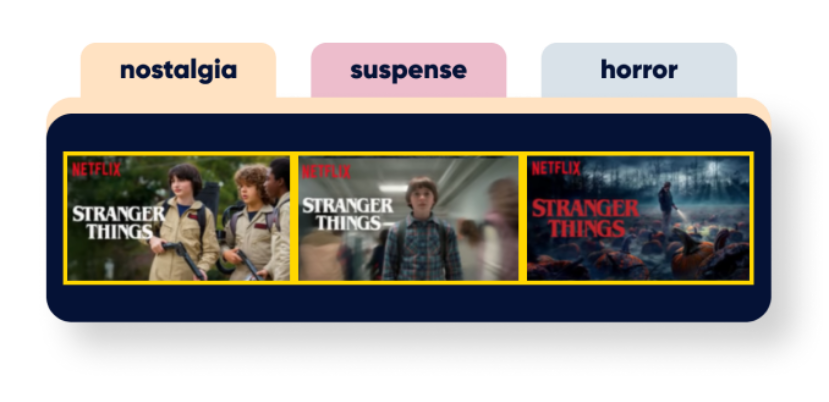

For viewers, the benefit is a more engaging and personalized experience. Streaming giants like Netflix use AI to analyze user behavior and dynamically adjust a show’s presentation. The thumbnail and synopsis you see for a series might be different from what another viewer sees, each tailored to emphasize plotlines or characters that resonate with personal preferences.

This goes deeper than algorithms. AI-powered sentiment analysis can help soften a bold message for a more reserved audience or ensure humor lands appropriately. The goal is cultural resonance, making the viewer feel like the content was made specifically for them.

Accessibility and Inclusivity

Perhaps one of the most laudable advances is in accessibility. AI can automatically draft detailed audio descriptions for visually impaired audiences, which are then refined by human writers, making content more inclusive at a scale previously too costly for every production.

A brilliant accessibility example is Spotify’s AI-powered podcast translation. The tool not only translates the podcast’s language but also clones the host’s voice, making them sound fluent in Spanish, French, or German while retaining their unique vocal identity. This breaks down language barriers in an incredibly intimate way, expanding reach and accessibility for global listeners.

Case Studies in Entertainment

Streaming Platforms

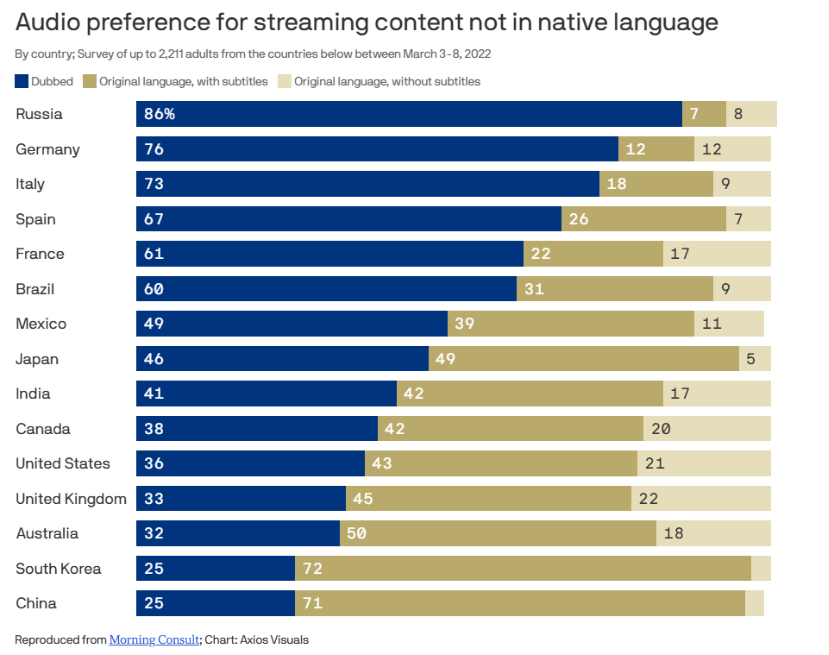

Netflix is the prime case study. Its investment in AI, like the DeepSpeak dubbing program, creates synthesized voices that sync with actors’ facial movements. Netflix’s strategy also highlights a key market tension: while the company has driven a 120% rise in dubbed content viewership, the preference for dubbing versus subtitles varies widely by region.

One point often gets overlooked: the AI revolution isn’t about choosing one format over the other. It’s about using AI to make both options superior and instantly available. The power is in giving the viewer a high-quality choice.

Film and TV Production

Generative AI tools like Midjourney are used for rapid prototyping of concept art and storyboards. Marvel’s use of AI for the opening credits of Secret Invasion demonstrated this potential for creating distinctive visuals, even as it sparked debate about the role of AI in core creative functions.

Challenges and Ethical Considerations

Of course, this transformation isn’t without its challenges.

Quality and Creative Integrity

AI still struggles with the subtleties of human language and creativity. Humor, idioms, and cultural references can be lost in translation. Synthetic voices, while improving, can sometimes lack emotional expressiveness, leading to the “dubby effect” that pulls viewers out of the experience. The quality is only as good as the training data, and a lack of diverse cultural datasets can lead to generic or inaccurate outputs.

Legal and Ethical Issues

The rise of voice cloning and AI-generated content has ignited fierce debates over performer rights and intellectual property. Unions like SAG-AFTRA are advocating for rules that require performer consent and compensation when their voice is used to train an AI. Furthermore, many AI models are trained on copyrighted works without clear permission, leading to complex legal battles about what constitutes original creation.

Human-AI Collaboration: The Indispensable Symbiosis

This brings us to the most critical consensus in the industry: the “human-in-the-loop” model. The most effective workflows use AI for its speed and scalability but keep human experts at the heart of the process. Linguists and cultural consultants are essential for refining AI output, ensuring cultural sensitivity, and injecting the creative nuance that machines cannot replicate. Their role is evolving from manual translators to strategic managers of AI systems.
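One way to picture the human-in-the-loop model is as a routing step: machine output that looks safe moves ahead, and everything else goes to a linguist. The sketch below assumes each translated line carries a model confidence score and a flag for culturally sensitive content. Both fields, the `TranslatedLine` structure, and the 0.85 threshold are hypothetical choices for illustration, not features of a specific platform.

```python
from dataclasses import dataclass


@dataclass
class TranslatedLine:
    """A machine-translated line plus review metadata (hypothetical fields)."""
    source: str
    translation: str
    confidence: float            # assumed model score between 0 and 1
    culturally_sensitive: bool   # assumed upstream flag (humor, idioms, taboos)


def route(lines: list[TranslatedLine], threshold: float = 0.85):
    """Split lines into an auto-approved queue and a human-review queue."""
    auto_approved, needs_review = [], []
    for line in lines:
        # Sensitive content always goes to a human, whatever the score.
        if line.culturally_sensitive or line.confidence < threshold:
            needs_review.append(line)
        else:
            auto_approved.append(line)
    return auto_approved, needs_review


if __name__ == "__main__":
    batch = [
        TranslatedLine("Good morning.", "Buenos días.", 0.98, False),
        TranslatedLine("Break a leg!", "¡Rómpete una pierna!", 0.91, True),
        TranslatedLine("It's raining cats and dogs.", "Llueve gatos y perros.", 0.62, False),
    ]
    auto, review = route(batch)
    print(len(auto), "auto-approved;", len(review), "sent to a linguist")
```

Routing sensitive lines to review regardless of score reflects the point above: confidence measures how sure the model is, not whether a joke or idiom will land in the target culture.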

Future Outlook

AI localization will become more integrated and sophisticated. We can expect real-time, adaptive content that modifies itself based on live audience engagement. This technology is democratizing distribution, allowing independent creators to reach a global audience without a massive budget.

For studios, the path forward involves several key steps:

- Adopt Hybrid Workflows: Embrace platforms like ClipLocal, which are explicitly designed to combine AI automation with human-in-the-loop review and collaboration for audio and video projects. This helps ensure the highest quality.

- Invest in Ethical Frameworks: Proactively address issues of consent, compensation, and transparency to build audience trust and avoid legal pitfalls.

- Focus on Quality Assurance: Implement robust QA processes that blend AI-driven checks with human review, especially for culturally sensitive content.
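For the quality assurance step in the list above, the automated half of a blended process can start with very simple checks. The sketch below flags subtitle cues that have overly long lines or that would have to be read too quickly. The limits used here (42 characters per line, 17 characters per second) are illustrative defaults only; a real style guide would set its own values, and anything flagged would still go to a human reviewer.

```python
def qa_issues(text: str, start: float, end: float,
              max_line_chars: int = 42, max_cps: float = 17.0) -> list[str]:
    """Return a list of readability problems for one subtitle cue.

    text may contain newlines separating on-screen lines;
    start and end are cue times in seconds.
    """
    duration = end - start
    if duration <= 0:
        return ["non-positive duration"]

    issues = []
    # Check each on-screen line against the length limit.
    for number, line in enumerate(text.splitlines(), start=1):
        if len(line) > max_line_chars:
            issues.append(
                f"line {number} has {len(line)} chars (max {max_line_chars})"
            )

    # Reading speed: characters shown per second, ignoring line breaks.
    cps = len(text.replace("\n", "")) / duration
    if cps > max_cps:
        issues.append(f"reading speed {cps:.1f} cps (max {max_cps})")
    return issues


if __name__ == "__main__":
    print(qa_issues("Hello there.", 0.0, 2.0))
    print(qa_issues("Too fast to read comfortably", 0.0, 1.0))
```

Checks like these are cheap to run on every language version, which leaves human reviewers free to concentrate on tone, humor, and cultural fit.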

Conclusion

AI-powered multimedia localization is far more than a productivity hack. It is a transformative force reshaping the global entertainment landscape. By breaking down the old barriers of time, cost, and scale, it empowers studios to tell stories that transcend borders. While challenges around quality and ethics remain, the future lies not in AI replacing humans, but in a powerful collaboration between them. This synergy promises a more inclusive, efficient, and richly connected global media world, where a great story from anywhere can find a home everywhere.